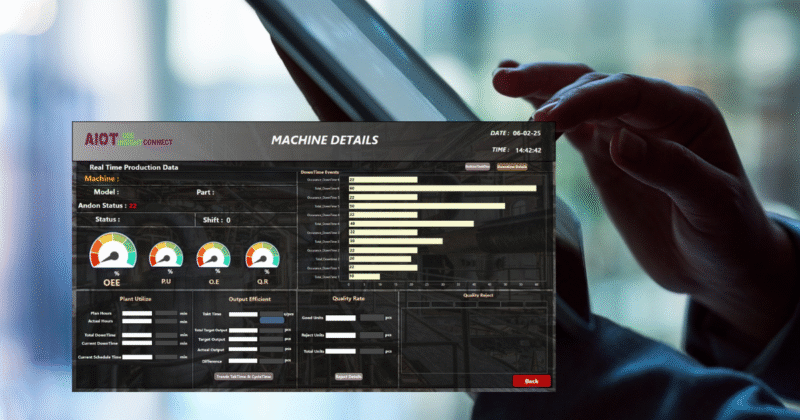

Non-Intrusive Data Extraction: Edge AI Production Efficiency Tracking

Innovation in non-intrusive data extraction

Shop floor data acquisition challenges

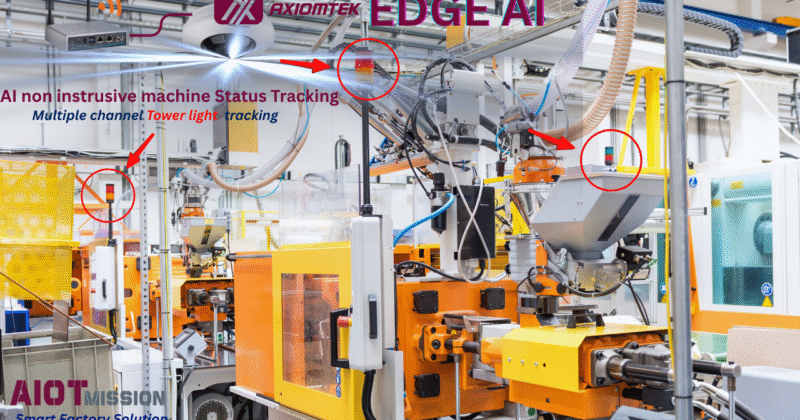

One of the biggest challenges in capturing data from the production floor is acquiring the necessary information from machines that are actively running. The most effective approach is high-level communication over standard protocols such as Modbus or other PLC communication protocols. In many cases, however, the required data is either unavailable or retained by the machine's vendor. Moreover, some machines cannot afford to be shut down because of high production demand.
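For machines that do expose a PLC interface, "high-level communication" typically means polling registers over a protocol such as Modbus TCP. The sketch below only builds and decodes the bytes of a standard Read Holding Registers (function code 0x03) exchange, so the framing is visible without needing a live controller. The register address 100 and the sample response values are hypothetical: which registers hold production counts is vendor-specific, which is exactly the access problem described above.

```python
import struct

READ_HOLDING_REGISTERS = 0x03


def build_read_holding_registers(transaction_id: int, unit_id: int,
                                 start_address: int, quantity: int) -> bytes:
    """Build a Modbus TCP request (MBAP header + PDU) for function 0x03."""
    if not 1 <= quantity <= 125:
        raise ValueError("Modbus allows 1..125 registers per read")
    # PDU: function code, starting address, register count (big-endian).
    pdu = struct.pack(">BHH", READ_HOLDING_REGISTERS, start_address, quantity)
    # MBAP header: transaction id, protocol id (always 0),
    # length of the remaining bytes (unit id + PDU), unit id.
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


def parse_read_holding_registers(frame: bytes, transaction_id: int) -> list[int]:
    """Decode a Modbus TCP response to function 0x03 into register values."""
    tid, pid, _length, _unit, function = struct.unpack(">HHHBB", frame[:8])
    if tid != transaction_id or pid != 0:
        raise ValueError("unexpected transaction or protocol id")
    if function == READ_HOLDING_REGISTERS | 0x80:
        # Exception responses set the high bit and carry a one-byte code.
        raise ValueError(f"Modbus exception code {frame[8]}")
    if function != READ_HOLDING_REGISTERS:
        raise ValueError(f"unexpected function code {function:#04x}")
    byte_count = frame[8]
    data = frame[9:9 + byte_count]
    if len(data) != byte_count or byte_count % 2:
        raise ValueError("truncated or malformed register data")
    # Each register is a 16-bit big-endian unsigned value.
    return list(struct.unpack(f">{byte_count // 2}H", data))


if __name__ == "__main__":
    request = build_read_holding_registers(transaction_id=1, unit_id=1,
                                           start_address=100, quantity=2)
    print(request.hex())  # 000100000006010300640002
    # Hypothetical reply carrying two registers: 0x002A and 0x0100.
    reply = bytes.fromhex("000100000007010304002a0100")
    print(parse_read_holding_registers(reply, transaction_id=1))  # [42, 256]
```

In practice the request bytes would be sent over a TCP socket to port 502 of the controller; that step is omitted here because it is only possible when the vendor exposes the interface in the first place.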


The Solution

Non-Intrusive Edge AI Data Extraction


Today’s industrial challenges require agile solutions. Our cutting-edge data extraction technology lets you monitor production progress without stopping or modifying the machine, improving productivity without disrupting operations.
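This page does not describe how the extraction works internally, so the following is only a hypothetical sketch of the general idea of camera-based, non-intrusive tracking. It assumes a camera is pointed at a machine's cycle indicator lamp and that each video frame has already been decoded into a grid of grayscale values; the function names, thresholds, and frame rate are illustrative, not part of any AIoTmission product. A production system may well use a trained vision model instead of a fixed brightness threshold.

```python
from typing import Iterable, List, Sequence


def roi_mean(frame: Sequence[Sequence[float]], top: int, left: int,
             height: int, width: int) -> float:
    """Average pixel intensity inside a rectangular region of interest (ROI)."""
    rows = frame[top:top + height]
    values = [px for row in rows for px in row[left:left + width]]
    return sum(values) / len(values)


def detect_cycle_starts(signal: Iterable[float], on_level: float,
                        off_level: float) -> List[int]:
    """Return frame indices where the lamp signal switches OFF -> ON.

    Hysteresis (on_level > off_level) stops noise around a single
    threshold from being counted as extra machine cycles.
    """
    if on_level <= off_level:
        raise ValueError("on_level must be greater than off_level")
    starts: List[int] = []
    is_on = False
    for i, value in enumerate(signal):
        if not is_on and value >= on_level:
            is_on = True
            starts.append(i)
        elif is_on and value <= off_level:
            is_on = False
    return starts


def cycle_times(starts: Sequence[int], fps: float) -> List[float]:
    """Seconds between consecutive cycle starts at the given frame rate."""
    return [(b - a) / fps for a, b in zip(starts, starts[1:])]


if __name__ == "__main__":
    # Synthetic per-frame brightness of the lamp ROI, sampled at 2 frames/s.
    lamp = [10, 12, 200, 210, 15, 11, 190, 120, 205, 20, 14, 220]
    starts = detect_cycle_starts(lamp, on_level=150, off_level=50)
    print(starts)                      # [2, 6, 11]
    print(cycle_times(starts, fps=2))  # [2.0, 2.5]
```

The dip to 120 at frame 7 stays above `off_level`, so hysteresis treats it as part of the same cycle; a single threshold at 150 would have counted an extra cycle there.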

“WTF EYE” – What The Fish Eye is a computer vision AI solution developed by the AIoTmission AI Team to address a common challenge on the production floor: machines that cannot be stopped and where traditional data interfacing methods are...