Fog Computing vs. Cloud

The recent live session, held on March 29th, delved into the comparison between Fog computing and Cloud computing. A dedicated team from AIoTmission highlighted the significance of the fog computing layer within the Edge-to-Cloud computing architecture.

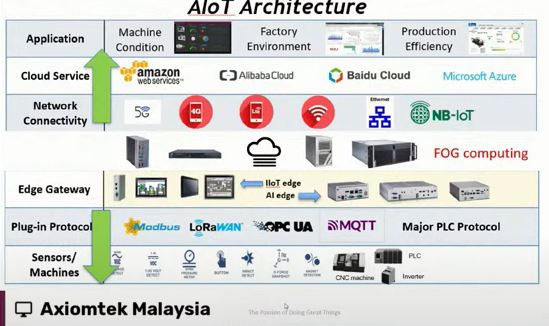

In typical AI and IoT architecture diagrams, the Fog computing layer often remains inconspicuous. This is primarily because fog computing devices or systems are typically integrated into the existing infrastructure, with the exception of new features like data analytics, which are more apparent due to advancements in AI technology.

The key differences among Edge, Fog, and Cloud computing were discussed during the session. Additionally, the main role of Fog computing in enhancing both edge and cloud computing was examined in detail.

These topics were thoroughly explored in episode 42 of the “Sembang AIoT” series.

Edge, Fog, and Cloud computing are three distinct paradigms in the realm of distributed computing, each serving unique purposes and offering specific advantages. Here are the main differences between them:

Location and Proximity:

Edge Computing: Edge computing involves processing data locally on devices that are closer to the data source, such as sensors, IoT devices, or end-user devices. The processing occurs at or near the data source, reducing latency and bandwidth usage.

Fog Computing: Fog computing extends the capabilities of edge computing by introducing an intermediate layer of computing resources between edge devices and the cloud. Fog nodes sit much closer to the edge devices than cloud data centers do, typically within the same local network infrastructure, and provide additional processing, storage, and networking capabilities.

Cloud Computing: Cloud computing involves the centralized provision of computing resources over the internet. Data processing, storage, and other computing tasks occur on remote servers maintained by cloud service providers, typically located in data centers.

Latency and Response Time:

Edge Computing: Edge computing offers the lowest latency since data processing occurs locally, allowing for real-time or near-real-time responses to events.

Fog Computing: Fog computing reduces latency compared to cloud computing by processing data closer to the edge devices. While not as low-latency as edge computing, it offers faster response times compared to sending data to centralized cloud servers.

Cloud Computing: Cloud computing may introduce higher latency due to data transmission to and from remote servers, especially for applications requiring real-time processing.

Scalability and Resource Availability:

Edge Computing: Edge devices typically have limited computing resources, making scalability challenging for resource-intensive applications.

Fog Computing: Fog computing provides greater scalability than edge computing by adding an intermediate layer of computing resources. Fog nodes can dynamically allocate resources based on demand, which helps distributed applications scale.

Cloud Computing: Cloud computing offers virtually unlimited scalability, with cloud service providers managing large data centers equipped with massive computing and storage capacities. Cloud resources can be easily scaled up or down based on demand.

Data Security and Privacy:

Edge Computing: Since data processing occurs locally, edge computing may offer enhanced data security and privacy by reducing the need to transmit sensitive data over networks.

Fog Computing: Fog computing introduces additional security considerations compared to edge computing, as data may be processed and stored on intermediate fog nodes. However, proper security measures can be implemented to mitigate risks.

Cloud Computing: Cloud computing raises concerns regarding data security and privacy, especially when transmitting sensitive data over the internet to remote servers. Cloud service providers implement various security measures to protect data, but data breaches remain a potential risk.

In summary, the main differences between Edge, Fog, and Cloud computing lie in their location, latency, scalability, and security characteristics. Each paradigm offers distinct advantages and trade-offs, and the choice between them depends on the specific requirements and constraints of the application or use case.
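As a rough illustration of how these trade-offs might drive a placement decision, the sketch below picks the closest tier that satisfies a workload's latency budget and compute requirement. The `Tier` class, the `choose_tier` function, and all latency and capacity figures are hypothetical values chosen for illustration; they were not presented in the session and real numbers depend on the deployment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    typical_latency_ms: float  # assumed round-trip latency, illustrative only
    compute_units: float       # assumed relative compute capacity


# Ordered from closest to the data source to farthest away.
# The numbers are placeholders, not measurements.
TIERS = [
    Tier("edge", typical_latency_ms=5, compute_units=1),
    Tier("fog", typical_latency_ms=30, compute_units=20),
    Tier("cloud", typical_latency_ms=200, compute_units=1000),
]


def choose_tier(latency_budget_ms, compute_needed, data_must_stay_on_site=False):
    """Return the name of the closest tier that meets every constraint, or None."""
    for tier in TIERS:
        if data_must_stay_on_site and tier.name == "cloud":
            continue  # privacy constraint rules out off-site processing
        fast_enough = tier.typical_latency_ms <= latency_budget_ms
        powerful_enough = tier.compute_units >= compute_needed
        if fast_enough and powerful_enough:
            return tier.name
    return None  # no single tier fits; the workload would need to be split
```

With these placeholder figures, a workload with a 50 ms budget that needs 10 compute units lands on the fog tier: the edge is fast enough but too small, and the cloud is large enough but too slow.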

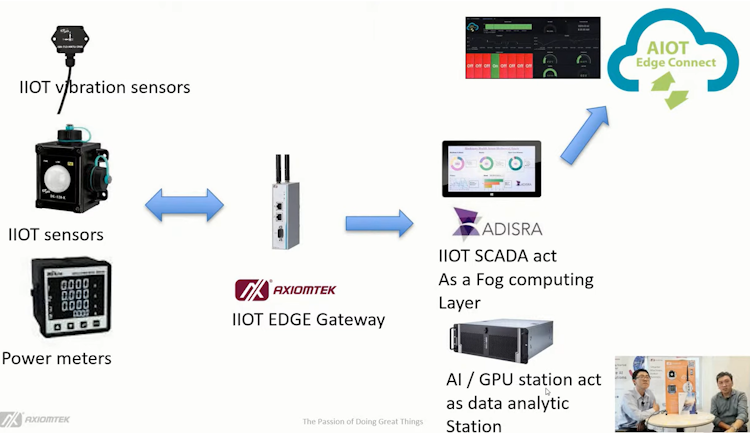

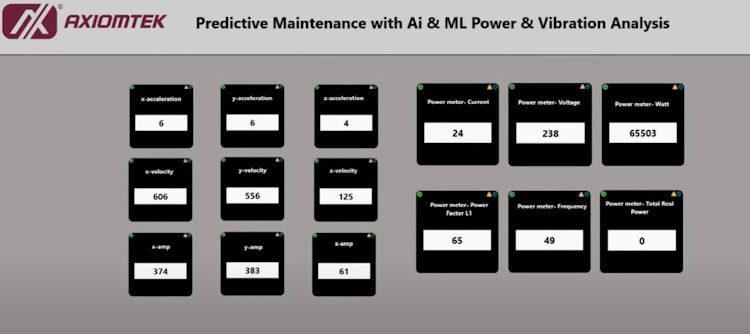

We demonstrated how a SCADA system, in this case the ADISRA SCADA package, handles the data exchange between the edge and the cloud. Power meter data and vibration data are collected by the edge IoT gateway. The data is then pushed to the fog node, in this case the ADISRA SCADA server, which presents and analyzes it before publishing it to the cloud.
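The edge-to-fog-to-cloud flow described here can be sketched in a few lines. This is a generic illustration, not the SCADA package's own API: the record layout, the `summarize_at_fog` and `publish_to_cloud` functions, and the device and metric names are all assumptions made for the example. The `send` callable stands in for whatever cloud client a real deployment would use.

```python
from statistics import mean


def summarize_at_fog(readings):
    """Group raw edge readings by (device, metric) and reduce each group to a summary."""
    groups = {}
    for r in readings:
        groups.setdefault((r["device"], r["metric"]), []).append(r["value"])
    return [
        {
            "device": device,
            "metric": metric,
            "count": len(values),
            "mean": round(mean(values), 3),
            "max": max(values),
        }
        for (device, metric), values in sorted(groups.items())
    ]


def publish_to_cloud(summary, send):
    """Hand each summary record to `send`, a stand-in for a real cloud client."""
    for record in summary:
        send(record)
    return len(summary)


# Hypothetical readings as an edge IoT gateway might push them to the fog node.
raw = [
    {"device": "meter-1", "metric": "power_kw", "value": 4.0},
    {"device": "meter-1", "metric": "power_kw", "value": 6.0},
    {"device": "motor-1", "metric": "vibration_mm_s", "value": 2.5},
]

sent = []  # collects what would have gone to the cloud
publish_to_cloud(summarize_at_fog(raw), sent.append)
```

Three raw readings become two summary records, which is the point of the fog layer in this flow: the cloud receives condensed, already-analyzed data instead of every sample.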

In this type of setup, predictive maintenance can be performed at the SCADA level with the help of additional ML/AI computing, and decisions can be made as soon as the data analytics are complete. This demonstrates the power of fog computing in the real world.
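To make the idea of a fog-level maintenance decision concrete, here is a minimal sketch that flags unusual vibration readings with a rolling z-score. It is a simple statistical stand-in for the ML model, not the method used in the demo; the `VibrationMonitor` class, the window size, and the threshold of 3 standard deviations are assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, pstdev


class VibrationMonitor:
    """Flags readings that deviate strongly from a rolling baseline."""

    def __init__(self, window=20, z_threshold=3.0, min_samples=5):
        self.history = deque(maxlen=window)  # recent readings considered normal
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def update(self, value):
        """Return True if `value` is anomalous relative to the recent window."""
        anomalous = False
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                anomalous = True
        if not anomalous:
            # Keep outliers out of the baseline so one spike does not mask the next.
            self.history.append(value)
        return anomalous
```

A fog node could call `update` for each vibration sample arriving from the gateway and raise a maintenance alert locally when it returns `True`, without waiting for a round trip to the cloud.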

SCADA (Supervisory Control and Data Acquisition) servers can be configured to act as fog nodes in smart manufacturing environments, providing additional processing, storage, and networking capabilities at the edge of the network. Here’s how SCADA servers can serve as fog nodes:

Local Data Processing: SCADA servers are typically equipped with computing resources capable of processing data locally. In smart manufacturing, SCADA servers can analyze data from sensors, PLCs (Programmable Logic Controllers), and other devices in real-time, without needing to transmit all data to centralized cloud servers.

Real-time Control and Monitoring: SCADA systems are designed for real-time control and monitoring of industrial processes. By acting as fog nodes, SCADA servers can execute control algorithms, perform data filtering, and generate immediate responses to events occurring on the factory floor.

Edge Analytics: SCADA servers can host analytics software capable of running advanced algorithms for predictive maintenance, anomaly detection, and optimization of manufacturing processes. These analytics can be performed locally on the SCADA server, leveraging historical data and machine learning models to generate insights at the edge.

Integration with Edge Devices: SCADA servers can communicate directly with edge devices such as PLCs, HMIs (Human-Machine Interfaces), and sensors. This integration allows SCADA servers to collect data from distributed devices, coordinate control actions, and exchange information with other fog nodes or cloud servers as needed.

Redundancy and Fault Tolerance: SCADA systems often incorporate redundancy and fault tolerance mechanisms to ensure continuous operation in industrial environments. SCADA servers acting as fog nodes can provide local redundancy, fault detection, and failover capabilities, minimizing disruptions to manufacturing processes.

Secure Communication: SCADA servers implement secure communication protocols to exchange data with edge devices and other components of the industrial control system. This ensures the integrity, confidentiality, and availability of data transmitted between fog nodes and other networked devices.

In summary, SCADA servers can effectively serve as fog nodes in smart manufacturing environments, extending the capabilities of edge computing by providing local processing, control, analytics, and communication functions. By distributing computing resources closer to the edge of the network, SCADA servers help optimize industrial processes, enhance real-time decision-making, and improve overall system performance and resilience.
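Two of the roles listed above, data filtering and tolerance of upstream outages, can be sketched together. The example below applies a deadband filter (a reading is forwarded only if it moved by more than a set amount) and buffers readings locally until the cloud link accepts them. The `FogForwarder` class and its parameters are hypothetical and do not represent any SCADA vendor's interface.

```python
from collections import deque


class FogForwarder:
    """Deadband filtering plus store-and-forward buffering at a fog node."""

    def __init__(self, deadband, max_buffer=1000):
        self.deadband = deadband
        self.last_sent = {}                     # tag -> last value queued for upload
        self.buffer = deque(maxlen=max_buffer)  # oldest entries drop first when full

    def ingest(self, tag, value):
        """Queue the reading only if it changed by more than the deadband."""
        previous = self.last_sent.get(tag)
        if previous is not None and abs(value - previous) <= self.deadband:
            return False  # change too small to be worth forwarding
        self.last_sent[tag] = value
        self.buffer.append((tag, value))
        return True

    def flush(self, send):
        """Send buffered readings in order; stop at the first failure and keep the rest."""
        sent = 0
        while self.buffer:
            if not send(self.buffer[0]):
                break  # uplink unavailable, retry on the next flush
            self.buffer.popleft()
            sent += 1
        return sent
```

Here `send` is any callable that returns a truthy value on success. If the cloud connection is down, `flush` returns 0 and the readings stay in the buffer, so local monitoring continues and nothing within the buffer limit is lost.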

To watch the live session on our YouTube channel, follow the link below:

https://youtube.com/live/eKB90TcVi2c

AIoTmission Sdn Bhd, established in 2022 as a subsidiary of Axiomtek (M) Sdn Bhd, is a leading provider of technological training and consultancy services specializing in Artificial Intelligence (AI) and Industrial Internet of Things (IIoT) solutions. Our mission is to drive the Fourth Industrial Revolution (IR4.0) and facilitate digital transformation across Southeast Asia, including Malaysia, Singapore, Indonesia, the Philippines, Thailand, Vietnam, and Myanmar.

At AIoTmission, we are dedicated to advancing research and development in AI and IIoT technologies, with a focus on industrial applications such as sensors, gateways, wireless communications, machine learning, AI deep learning, and Big Data cloud solutions. Through collaboration with our valued clients and partners, we deliver innovative solutions tailored to industry needs, enhancing technological capabilities and operational efficiency.